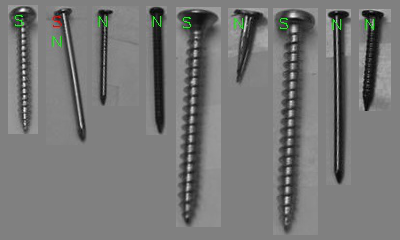

For a while now I’ve been working on an idea: a system that can recognize fasteners (screws, nuts, bolts, nails, washers, etc.) on the fly and then measure some descriptive dimensions of each part (e.g. length, width, inner and outer diameter). Today I finally got a prototype of the recognition system up and running. The system applies a simple threshold to the scene to separate the parts from the background, normalizes each part into a standard form (namely, aligned to its major axis), and then extracts some basic features: edge length and orientation histograms, and Hu moments. This feature vector then gets piped into a support vector machine to do the recognition. Right now the system runs at about 8 FPS at full resolution. The training error on the SVM was about 13%, but the training data was really, really poor and not that large (75 samples, with about 10% of those being basically misfires from an automatic data extraction module I wrote). I still have a long way to go: the feature extractor could use some work, and the whole data processing pipeline needs to be optimized. In particular, there are some fairly costly image rotation operations that could be reworked to improve performance. I also need to train on the full set of fastener types, not just bolts and nuts.
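
To make the alignment and moment steps a little more concrete, here is a minimal pure-Python sketch. It is not the prototype's code: the function names (`threshold`, `shape_moments`, `major_axis_angle`, `hu_first_two`) are made up for illustration, the image is assumed to be a plain list of rows of grayscale values, and only the first two of the seven Hu moments are computed. The major-axis angle comes from the second-order central moments of the thresholded blob.

```python
import math


def threshold(gray, level):
    """Binarize a grayscale image (list of rows): 1 where pixel > level."""
    return [[1 if px > level else 0 for px in row] for row in gray]


def shape_moments(mask):
    """Return (area, mu20, mu11, mu02) for the foreground pixels of a mask."""
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, v in enumerate(row) if v]
    area = len(pixels)
    if area == 0:
        raise ValueError("mask contains no foreground pixels")
    # Centroid of the blob.
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area
    # Second-order central moments (translation invariant).
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    return area, mu20, mu11, mu02


def major_axis_angle(mask):
    """Angle of the blob's major axis in radians, in image coordinates."""
    _, mu20, mu11, mu02 = shape_moments(mask)
    return 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)


def hu_first_two(mask):
    """First two Hu moments, built from normalized central moments."""
    area, mu20, mu11, mu02 = shape_moments(mask)
    # For second-order moments the normalization factor is area squared.
    n20, n11, n02 = mu20 / area ** 2, mu11 / area ** 2, mu02 / area ** 2
    h1 = n20 + n02
    h2 = (n20 - n02) ** 2 + 4.0 * n11 ** 2
    return h1, h2


if __name__ == "__main__":
    # A synthetic bright horizontal bar standing in for a bolt.
    scene = [[0] * 9 for _ in range(5)]
    for x in range(1, 8):
        scene[2][x] = 200
    mask = threshold(scene, 128)
    print("major axis angle (rad):", major_axis_angle(mask))
    print("first two Hu moments:", hu_first_two(mask))
```

Because the Hu moments are rotation invariant, the same bar drawn vertically should produce the same two values. That also suggests rotation-invariant features could be computed without physically rotating the image, although features such as orientation histograms would still need the major-axis angle as a reference.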

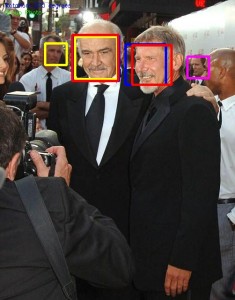

Once the recognition system is working well, I hope to use the ALVAR augmented reality library and its fiducials to determine the part dimensions by assuming that the part is effectively coplanar with the fiducial. The fiducial should also give us a three-dimensional location for the recognized part. Right now I am doing this work for the CGUI lab at Columbia. Our end game is to wrap this code up into an augmented reality system for maintenance applications, where knowledge may be shared between a remote subject matter expert and an on-site maintenance technician. Our hope is that a system like this can speed up maintenance tasks by helping the maintainer identify and locate parts faster. Part of the problem with using AR in this domain is that head-mounted displays really don’t have very high resolution, which reduces your visual acuity and makes it difficult to recognize individual parts.
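
As a rough illustration of the planar measurement idea, the sketch below converts a pixel distance to millimetres using a fiducial of known physical size. It does not use ALVAR's API; the function names and the corner format are hypothetical, and it assumes the camera looks at the marker plane roughly head-on so that a single scale factor is good enough. For an oblique view, a homography or the marker's full pose would be needed instead.

```python
import math


def mm_per_pixel(marker_corners_px, marker_side_mm):
    """Estimate image scale from the four corners of a square fiducial.

    marker_corners_px: four (x, y) corners in order around the square.
    marker_side_mm: the marker's known physical side length.
    """
    n = len(marker_corners_px)
    if n != 4:
        raise ValueError("expected exactly four marker corners")
    side_lengths = []
    for i in range(n):
        x0, y0 = marker_corners_px[i]
        x1, y1 = marker_corners_px[(i + 1) % n]
        side_lengths.append(math.hypot(x1 - x0, y1 - y0))
    # Averaging the four sides smooths out small detection errors.
    mean_side_px = sum(side_lengths) / n
    return marker_side_mm / mean_side_px


def part_length_mm(end_a_px, end_b_px, scale_mm_per_px):
    """Length of a part lying in the marker plane, from its two endpoints."""
    dx = end_b_px[0] - end_a_px[0]
    dy = end_b_px[1] - end_a_px[1]
    return math.hypot(dx, dy) * scale_mm_per_px


if __name__ == "__main__":
    # Hypothetical detection: a 50 mm marker seen as a 100 px square.
    corners = [(100, 100), (200, 100), (200, 200), (100, 200)]
    scale = mm_per_pixel(corners, 50.0)
    print("bolt length (mm):", part_length_mm((300, 150), (300, 210), scale))
```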