I had a moment to play with steganography while I was watching TV tonight. Steganography is a way of hiding text messages inside image files so that the image is not visibly altered. I came across this nice little tutorial using the stepic Python library. I was able to get stepic working and hooked into SimpleCV with only a little bit of massaging (I needed to turn the SimpleCV Image into a PIL Image and back again). Here is the actual commit.

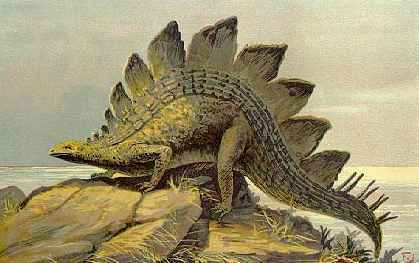

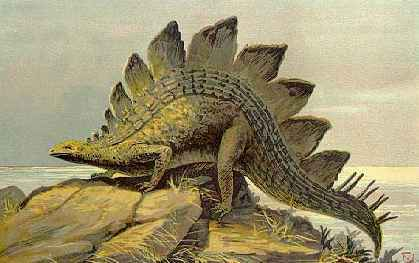

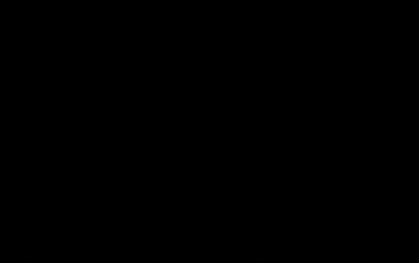

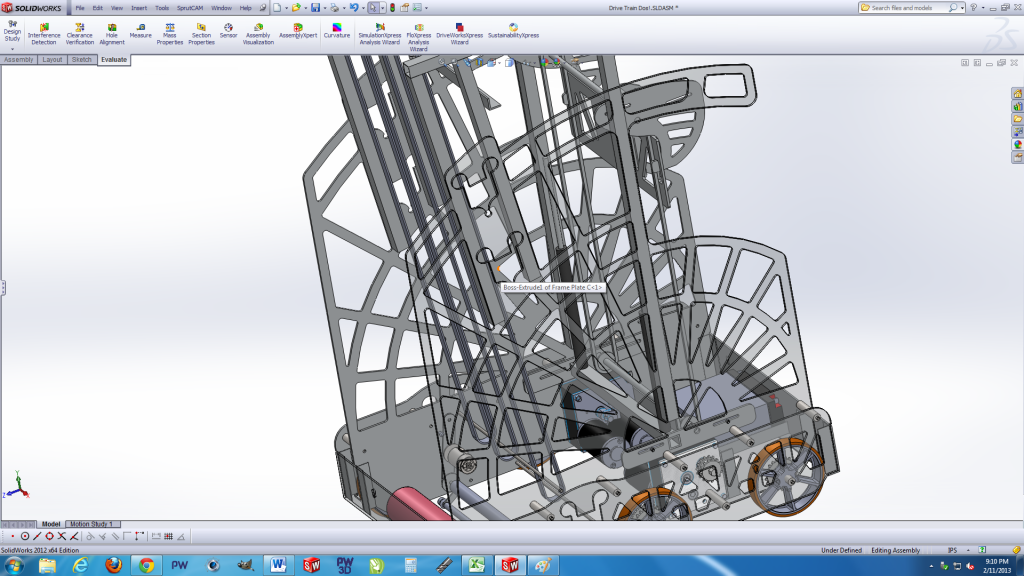

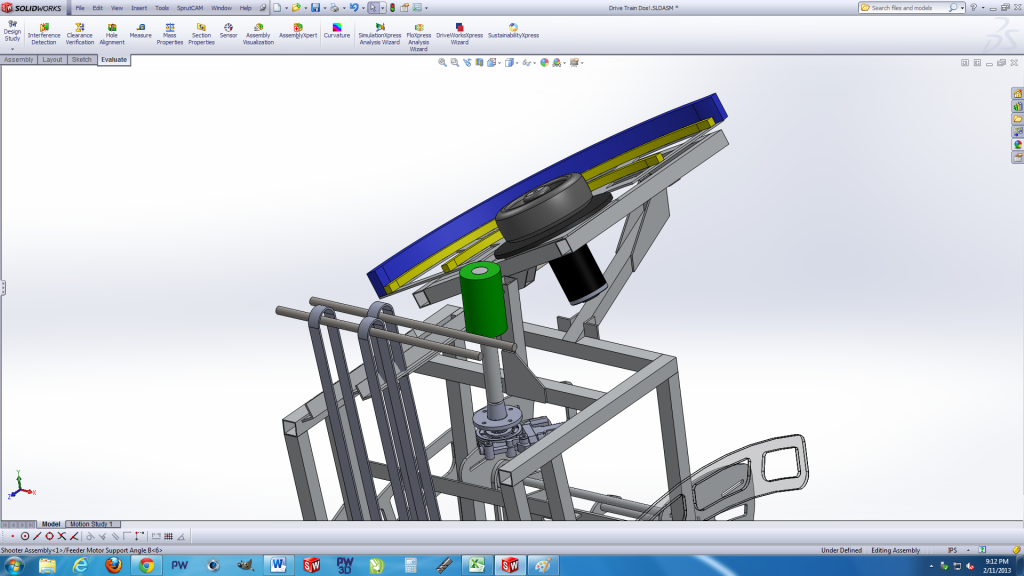

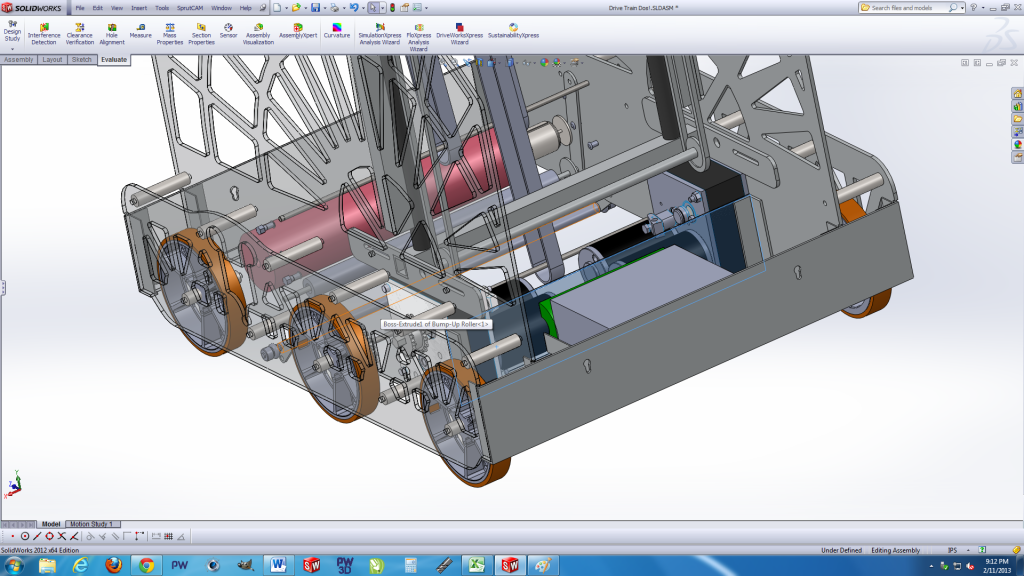

I wrote a little bit of code to test my work and to see if I could tease out the algorithm. Basically all I did was encode a message, in this case the Wikipedia entry on stegosaurs, into an image and then subtract that image from the original image to create a diff. To the naked eye the source image and the encoded image look the same. The diff also looks as it should, that is to say all black. To dig a bit deeper I applied a histogram equalization function to stretch out the diff's dynamic range. Bingo, the encoding scheme is clearly visible.
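To make the "black diff, then bingo" step concrete, here is a minimal pure-Python sketch. It assumes a generic least-significant-bit (LSB) embedding on a flat list of 8-bit values. I have not checked this against stepic's source, so treat `embed_lsb`, `extract_lsb`, and the toy `cover` data as hypothetical stand-ins, and `stretch` as a simple linear substitute for real histogram equalization.

```python
# Illustrative only: a generic LSB embedding, not stepic's actual algorithm.

def embed_lsb(values, message):
    """Return a copy of `values` with the message bits written into the LSBs."""
    bits = [(byte >> shift) & 1 for byte in message for shift in range(7, -1, -1)]
    if len(bits) > len(values):
        raise ValueError("message too long for this cover")
    stego = list(values)
    for i, bit in enumerate(bits):
        stego[i] = (stego[i] & ~1) | bit  # clear the lowest bit, then set it
    return stego

def extract_lsb(values, n_bytes):
    """Read `n_bytes` back out of the LSBs, most significant bit first."""
    out = bytearray()
    for k in range(n_bytes):
        byte = 0
        for v in values[8 * k:8 * k + 8]:
            byte = (byte << 1) | (v & 1)
        out.append(byte)
    return bytes(out)

def abs_diff(a, b):
    """Per-value absolute difference, like subtracting two images."""
    return [abs(x - y) for x, y in zip(a, b)]

def stretch(values):
    """Linearly rescale values to span 0..255 (stand-in for equalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    return [(v - lo) * 255 // (hi - lo) for v in values]

cover = [(i * 7) % 256 for i in range(64)]  # hypothetical 64-value "image"
stego = embed_lsb(cover, b"steg")           # 4 bytes = 32 bits
diff = abs_diff(cover, stego)               # values of 0 or 1: looks black
stretched = stretch(diff)                   # values of 0 or 255: now visible
```

Under this assumption no value changes by more than 1 out of 255, which is why the raw diff looks black, and stretching the range pushes every touched value to full brightness.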

The next step is to look at the individual color channels to see if they are holding on to any information. I could look at the actual algorithm in the source, but that would be no fun. It would be interesting to see if I could build a heuristic for determining which images have encoded data. It would also be useful to add AES encryption to the encode/decode calls in SimpleCV.
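A first pass at the per-channel check could be as simple as counting how many values changed in each channel. This is a rough sketch, assuming pixels are available as `(r, g, b)` tuples; `changed_per_channel` and the sample pixel data are made up for illustration and are not part of SimpleCV or stepic.

```python
def changed_per_channel(original, encoded):
    """Count, per channel, how many pixel values differ between two images."""
    counts = [0, 0, 0]
    for p, q in zip(original, encoded):
        for c in range(3):
            if p[c] != q[c]:
                counts[c] += 1
    return dict(zip("RGB", counts))

# Hypothetical three-pixel images, just to show the shape of the output.
original = [(10, 20, 30), (40, 50, 60), (70, 80, 90)]
encoded = [(11, 20, 30), (40, 51, 60), (71, 80, 90)]
report = changed_per_channel(original, encoded)
```

If one channel came back with far more changes than the others, that would hint at where the data is being stored. Note that this needs the original image for comparison, so it is not yet the blind heuristic I am after.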

As a side note, I tried to decode my image using this online utility but had no luck. My guess is that there are incompatibilities between the stable 0.3 release of the stepic library and the development 0.4 release that the utility might be running.