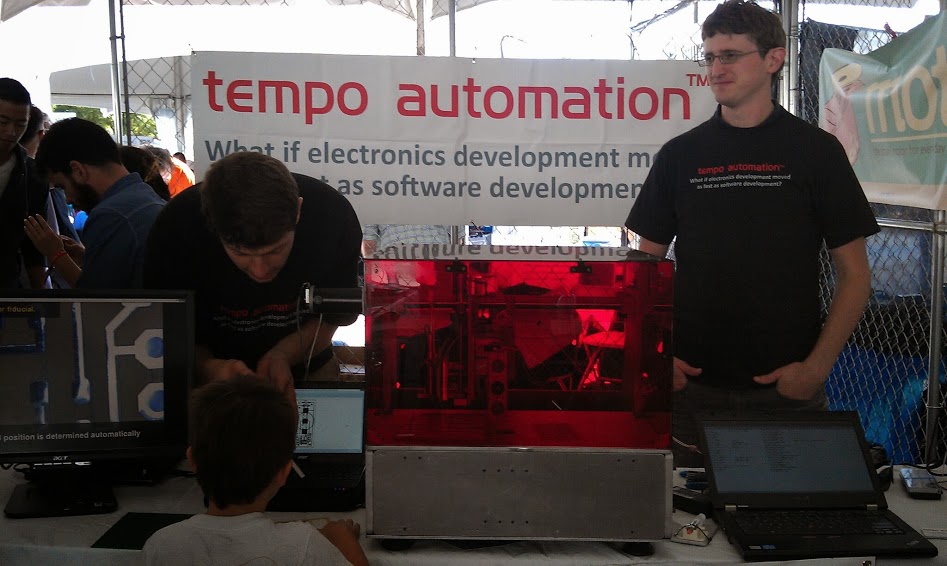

I quit my well-paying job as a hired gun for a post-funding start-up in Boston to return to the world of eating boiled newspapers and sleeping on the floor of a hacker squat. I feel like I have caught my breath and I am ready to try again. After a drawn-out courtship I have decided to come on as the software lead and co-founder of Tempo Automation. We’re after the very seductive idea of building robots and helping individuals and other engineers design, build, and test their own printed circuit boards (PCBs). The immediate goal is to put a pick-and-place robot in every hacker space and engineering office around the world. Every shop with a 3D printer and a laser cutter should have one of our machines. The end-game is to convert these simple robots from mere pick-and-place machines into a one-stop PCB factory that does milling, solder deposition, pick and place, reflow, and ultimately AOI, programming, and testing. Raw materials go in one side, PCBs come out the other side. Idea bits get converted into atoms. We want to do for electrical engineering what the RepRap and MakerBot did for mechanical engineering.

I am really, truly excited to be working on an interdisciplinary team once again. Robotics is a field where people still take pride in using the word “engineer” in their title. When I say engineer I mean people who want to fix problems, not just wax brass on the Titanic by writing bank software or slick advertising webpages. Being a big “E” engineer means you get to put down the mouse, pick up a multimeter and some hex keys, and build some awesome. I feel like I couldn’t have found a better set of co-founders. Jeff McAlvay embodies everything I want in a non-technical founder: he knows how to run a business but he doesn’t want to be an executive. Jeff is sincere about the things that are important: solving the problem, learning the technology, and helping the customers. I am also in awe of Jeff’s time at McMaster-Carr; McMaster is such a great organization and I want to learn more about how people like Jeff made it run so perfectly. I am also excited to be working with Tempo’s other co-founder and mechanical lead, Jesse Koenig. Jesse serves as a great counterbalance to my personality. He is detail-oriented where I would be hand-wavy, he likes to work on the books while I do community stuff, and he seems to know just when to force me to listen and when to leave me alone to code. If this were a buddy-cop movie, Jesse would be the good cop to my bad cop, and Jeff would be the affable but stern police commissioner providing us with the resources to clean up the mean streets of PCB City. Rounding out our team are Prof. Peter Vajda, a visiting Computer Vision professor at Stanford, and Jon Thorne, one of the best all-around mechanical engineers I’ve met. Ted Blackman and Cody Daniels, from the 3Scan crew, are also lending us their software and hardware expertise.

I am also amazed by the community that Jeff and Jesse have chosen to surround themselves with. The people at MI7 and Langton Labs are fantastic, and the place reminds me so much of home at Arbor Vitae. The first thing I said when I walked into the office was that it felt like home. Jeff has also done a fantastic job of organizing and growing a hardware community in San Francisco, and I am really excited not just to work on this project but to have the privilege of sharing it with others.

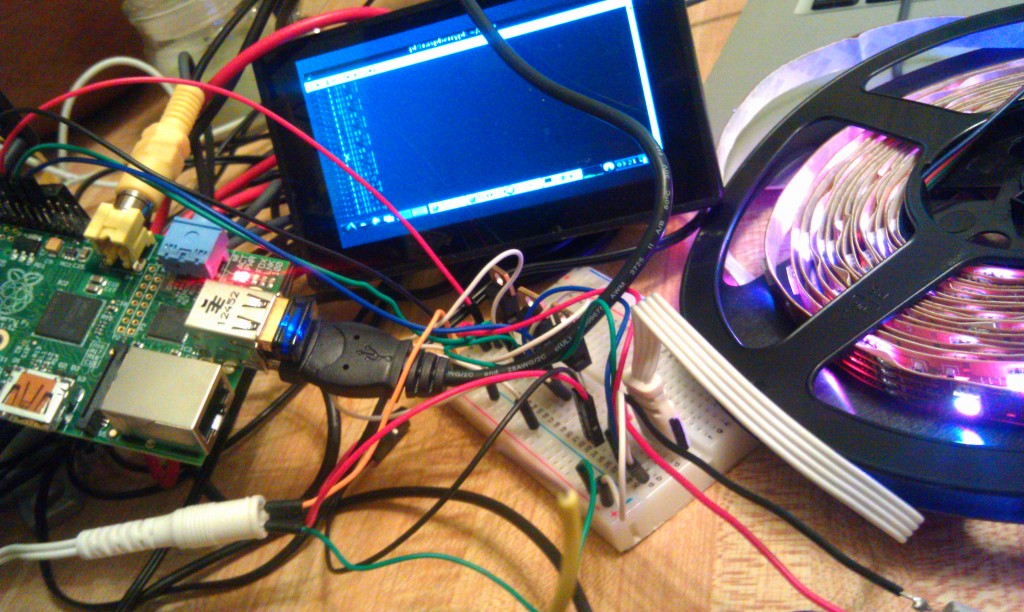

Finally, I am really excited about our technology stack, as it lets me use my knowledge of computer vision within a suite of new software. Things are still solidifying, but it looks like I will be working not just with a lot of open software but also with a lot of open hardware (and hopefully building a lot of it too). I am looking forward to learning a lot about Meteor, JavaScript, Bottle/Flask, and potentially ROS in the coming weeks. There is still a lot of code to write but I can already see the parts coming together.

Will this venture make us so insanely rich that we can jump Teslas off our yachts? Probably not, but it is an idea with a market need where we can grow a reasonable business. We also won’t ever have to talk about our “product” as being the be-all and end-all social/mobile/local/viral cloud analytics marketplace as a service. We’re doing something that matters. Something that helps make the world a better place by enabling people to build what they dream of and what they need. To be sure, we can’t make a business if we don’t make money, and I am sure we can, but what is more important is that we’re spending every waking moment doing something we love and that we feel really matters. Most important of all, I get to spend the next few years teaching people how to build electronics and use robots to make their ideas a reality. I haven’t been this happy in a long time.